Biometric technology covers a range of identifiers drawn from the human body and behavior: fingerprint scanning, facial recognition, iris authentication, voice ID, even the way you walk. Just a decade ago, this stuff seemed reserved for spy movies and top-secret labs. Now it’s part of everyday life. Your phone unlocks with your face. Airports use biometrics to speed up security. Some banks let you authorize transactions with your voice.

The shift wasn’t overnight, but it’s now undeniable. Biometrics are everywhere because they offer a faster, more secure, and user-friendly way to prove identity. In 2024, they’re not just convenient—they’re foundational. As more of our lives move online and concerns about data security grow, biometric systems are becoming a frontline defense—and a daily touchpoint for millions.

Biometrics are no longer just a futuristic nice-to-have. They’re quietly replacing the old password model, and for good reason. Fingerprints, facial recognition, voice ID—these tools aren’t just faster, they’re harder to fake. The traditional username-plus-password combo is getting phased out in favor of authentication that’s tied to who you are, not what you remember.

Two-factor authentication (2FA) and multi-factor authentication (MFA) act as the bridge between the password era and what comes next. They’re security-minded, but still a bit clunky. Biometrics add both ease and strength: you’re no longer juggling one-time codes or reset links. Just a glance or a touch and you’re in.

Real-world examples point to a solid trend. Many banks now use fingerprint or facial ID for access to their mobile apps. Some hospitals and clinics are swapping passwords for retina and voice scans to secure patient data. Even ride-share apps are experimenting with facial verification before drivers start a shift. It’s not just a security win; it’s also faster and more user-friendly.

The move toward bio-verification isn’t about flashy tech. It’s about reducing friction while boosting trust. That combo is winning.

AI Is Speeding Up Workflow Without Replacing Humans

The rise of generative AI has changed the way vloggers work, but it hasn’t made them obsolete. Far from it. Creators are using tools like ChatGPT and automated editors to cut down on scripting time, brainstorm titles, and trim hours off post-production workflows. These tools are fast, but they’re only as good as the person using them.

The trick is knowing where to draw the line. Top vloggers lean into AI for the grunt work—transcriptions, tagging, basic edits—but keep the big stuff human. Voice. Humor. Presence. You can’t fake that with code. If your content sounds robotic or generic, audiences drop off. Fast.

The opportunity lies in delegation, not replacement. Let AI handle the repetitive tasks so you can focus on being present, creative, and responsive. That balance is what keeps your vlog feeling fresh instead of factory-made.

Behavioral Biometrics, Emotion Tech, and the Rise of the Gray Area

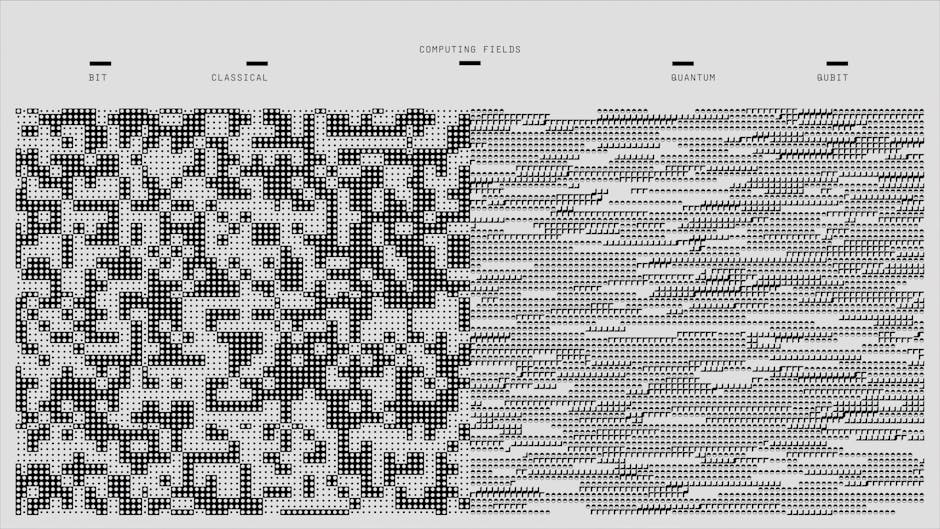

The way you type, swipe, walk, or glance is no longer just your business. Behavioral biometrics are everywhere now, packaged as the next step in seamless user experience. Platforms, devices, even apps are logging micro-signals from users to verify identity, predict behavior, or tailor content—all in real time.

Biometric wearables have moved beyond fitness tracking. Smartwatches and rings don’t just measure heart rate; they’re being tapped for continuous authentication. If your gait looks off or your grip changes, the system notices. That makes security frictionless, but it also means you’re being scanned more often than you may realize.
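To make “the system notices” a little more concrete, here is a minimal sketch of one behavioral signal mentioned above, typing rhythm (keystroke dynamics). The idea: learn how long a user typically pauses between specific key pairs, then score new typing against that profile. Everything here is an illustrative assumption, not how any particular product works: the function names, the per-key-pair mean and standard-deviation model, and the threshold of 2.0 are all hypothetical, and real systems use far richer features and models.

```python
from statistics import mean, stdev

# Hypothetical keystroke-dynamics sketch, not any product's actual method.
# A "digraph" is a pair of consecutive keys (e.g. "th"); each value is a list
# of intervals in milliseconds between pressing the first and second key.

def build_profile(sessions, min_sigma=1.0):
    """Pool enrollment sessions into {digraph: (mean_ms, stdev_ms)}."""
    pooled = {}
    for session in sessions:
        for digraph, intervals in session.items():
            pooled.setdefault(digraph, []).extend(intervals)
    profile = {}
    for digraph, intervals in pooled.items():
        if len(intervals) >= 2:  # stdev() needs at least two samples
            # Floor the spread so a very consistent typist doesn't get sigma near 0.
            profile[digraph] = (mean(intervals), max(stdev(intervals), min_sigma))
    return profile

def anomaly_score(profile, session):
    """Mean absolute z-score of a session's timings against the profile."""
    zs = [
        abs(x - profile[d][0]) / profile[d][1]
        for d, intervals in session.items()
        if d in profile
        for x in intervals
    ]
    # No overlapping digraphs means nothing to compare: treat as unverified.
    return mean(zs) if zs else float("inf")

def still_same_user(profile, session, threshold=2.0):
    """True if typing looks like the enrolled user; False should trigger a step-up check."""
    return anomaly_score(profile, session) <= threshold

enrollment = [
    {"th": [110, 120, 115], "he": [95, 100]},
    {"th": [118, 112], "he": [98, 102, 97]},
]
profile = build_profile(enrollment)
print(still_same_user(profile, {"th": [116, 113], "he": [99]}))   # True
print(still_same_user(profile, {"th": [180, 190], "he": [160]}))  # False
```

In this sketch a `False` result doesn’t lock anyone out on its own; it would prompt a fallback such as a PIN or a face check. Raising or lowering the assumed threshold trades false rejections of the real user against false acceptances of someone else.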

Then there’s emotion recognition. Cameras track subtle facial cues; microphones listen for shifts in tone. It’s not sci-fi, and it’s already being deployed: systems now claim to tell whether you’re bored, sad, or angry. Brands love it. Ethicists don’t. Vloggers using this tech must tread lightly, because in both surveillance and sentiment analysis the line between insight and intrusion is thin.

Legally, it’s murky. Consent isn’t always clear, especially when data is collected passively. Viewers rarely opt in to being analyzed, and in many places the law hasn’t caught up. For creators, that makes compliance a moving target. Use these tools and you risk backlash or worse; ignore them and you might fall behind in a space that rewards optimization.

The bottom line: behavioral data is powerful, but it’s also personal. As creators fold these tools into production, they need to be transparent, thoughtful, and ready for pushback.

Biometric data used to feel like something limited to spy movies or high-end tech, but it’s everywhere now—face unlock, voice recognition, movement tracking. And with it comes a serious question: who actually owns that data? If a platform authenticates you with your face, are you giving them permission to keep, sell, or analyze it forever? The lines are blurry, and the fine print rarely offers peace of mind.

In 2024, data breaches aren’t just about passwords and emails; they can expose physical identity markers. A leak of facial scans or fingerprint records doesn’t just compromise privacy; it erodes trust in the tools creators and platforms rely on. And the damage is harder to undo: you can reset a password, but you can’t reset your face.

Then there’s the algorithmic elephant in the room. Some surveillance tools use facial data to gauge emotions, behavior, even the ‘marketability’ of creators. The issue? These systems often carry built-in bias. Creators from marginalized groups are more likely to be flagged inaccurately or deprioritized because of skewed training data. There’s a growing call for clearer ethics, tighter regulation, and transparency in how surveillance tech is used, not just by governments but by the platforms that host and promote your content.

As emerging tech reshapes the creator landscape, it’s not just about faster tools and smarter platforms. Companies, developers, and viewers all have skin in the game. The line between creator and algorithm is blurring, and that raises real questions about responsibility.

For developers, it’s time to build with intention. Tools that edit, suggest, and even co-create need transparency baked in. Who owns the data? How is it used? And what feedback loops are shaping what creators see and do? These aren’t just tech issues—they’re trust issues.

Companies rolling out AI or automation in the vlogging world should look beyond speed and scale. Ethical frameworks are essential. Clear disclosures, consent mechanisms, and fair-use models are no longer nice-to-haves—they’re the cost of doing business in a media space that’s becoming more personalized, more predictive, and more algorithmically driven.

Consumers, too, have a role. Engaging with content means contributing to the loop that trains the system. Audiences need to be aware of how their clicks, comments, and view times affect creator visibility and income.

Bottom line: the future of vlogging is intelligent, yes—but it also has to be accountable.

Biometric tech isn’t just a flashy feature anymore; it’s becoming the backbone of how people prove who they are. Face scans, voice signatures, even heartbeat patterns are starting to replace passwords and ID cards. They’re smoother, faster, and in many cases tougher to fake. For vloggers and digital creators, this changes how fans access content, how platforms track engagement, and how personal security is handled.

But with power comes responsibility. It’s easy to add fingerprint locks or facial recognition to an app; it’s harder to make sure they’re used right. Creators and developers alike need to ask tougher questions. Did users meaningfully consent to this feature? Is the data encrypted? Who will control it tomorrow?

This isn’t about keeping up with tech for tech’s sake. It’s about knowing that every digital interaction is inching closer to the skin. In 2024, the biggest leap won’t be another app—it’ll be in how we define identity. The future isn’t just digital—it’s personal.

Lorissa Ollvain is a tech author and co-founder of gfxrobotection with expertise in AI, digital protection, and smart technology solutions. She is dedicated to making advanced technology accessible through informative, user-focused content.