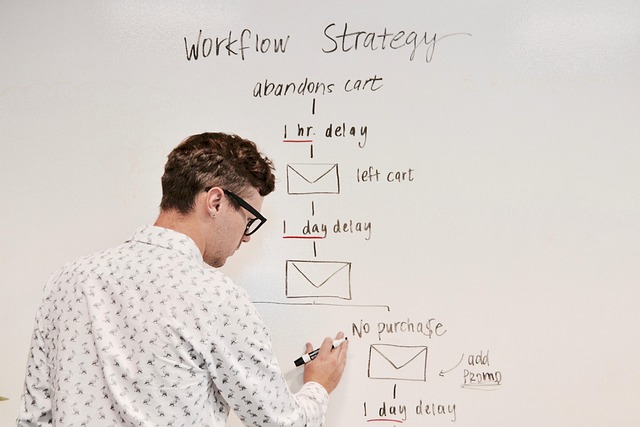

AI Is Speeding Up Workflows Without Replacing Humans

Vloggers used to spend hours piecing together intros, trimming raw footage, and scripting storylines. Now AI tools can do most of that in minutes. From automated video editing and captioning to script generation and trend analysis, creators are leaning hard into generative tech to buy back time. But while the machines are fast, they’re not creative thinkers.

Top creators aren’t handing over the reins completely. They’re using AI for the grunt work and saving their voice, tone, and creative judgment for what really matters. The risk, of course, is drifting into sameness. Many AI tools are trained on overlapping data and suggest similar structures, hooks, and phrasing, which makes it easy to slide into content that feels templated. The smart move is using automation without losing personality.

Creators serious about long-term brand relevance are staying sharp on what to delegate. First drafts? Sure. Edits for pacing? Absolutely. But the human touch still runs the show when it comes to storytelling and connection.

Building Ethics Into the Design Process

Start Early, Stay Consistent

One of the most effective ways to ensure ethical product development is to address potential issues from the very beginning. Waiting until launch or late-stage testing can lead to costly corrections or damaging oversights. Ethical review should be a steady checkpoint, not an afterthought.

Key strategies to implement early:

- Incorporate ethical assessments at each design phase

- Ask hard questions about impact, safety, and unintended consequences

- Flag concerns before building, not after

Create Dedicated Ethics Teams

A centralized ethics team can provide oversight, structure, and accountability throughout the development cycle. Without a clear owner, ethical considerations often fall through the cracks.

Components of a strong ethics team:

- Cross-functional members from engineering, design, user research, and legal

- Clear authority to pause or adjust product development

- Defined workflows for evaluating ethical concerns

Test with Real-World Representation

Diverse testing environments are essential. Products that perform well in isolated lab conditions can still fail when exposed to the broad diversity of real-life users.

Incorporate diversity into testing by:

- Including testers from different age groups, cultures, and ability backgrounds

- Running simulations for edge cases and unintended user behaviors

- Collecting feedback beyond traditional quantitative metrics

Ethics as Part of Product-Market Fit

Ethical design is more than a PR strategy or checklist. It’s part of a product’s value to users. In 2024, buyers and platforms are actively scrutinizing how products operate in the real world.

Why ethics must align with business strategy:

- Ethical missteps can erode trust and lead to user abandonment

- Regulators are increasingly stepping in where companies fall short

- Long-term product-market fit includes responsible use, not just market demand

Why AI Ethics Isn’t Just Theoretical Anymore

AI isn’t locked away in labs anymore. It’s making medical recommendations, approving loans, screening job applicants, and guiding police patrols. That makes AI ethics a front-line issue, not a niche debate. When algorithms shape real outcomes for real people, things like bias and transparency stop being academic—they become urgent.

Take automated decision-making. If a vlogging platform’s moderation system flags your channel as harmful because it misreads a single keyword, your reach and income can vanish overnight. Often there’s little human review and only a slow, opaque appeal process: a cold black box deciding your fate. This isn’t paranoia; automated moderation already makes calls like this at scale.

The tools are impressive, but speed doesn’t cancel out responsibility. Vloggers, creators, and tech companies are standing at the edge of something powerful. Innovation is the gas pedal, but ethics is the steering wheel. The question isn’t whether AI tools are useful. It’s how we build and use them without crashing into people’s lives.

When Ethics Are Ignored, Reputations Suffer

High-Profile Missteps

In an era where transparency is expected, ethical missteps can lead to swift and lasting reputational damage. Audiences are more informed and vocal, and companies that fail to recognize their ethical blind spots often learn the hard way.

Notable examples of reputational damage include:

- Facebook (Cambridge Analytica): Mishandling of user data led to public distrust, government scrutiny, and lasting damage to the brand’s credibility.

- Boeing: Ignoring internal safety concerns during the development of the 737 MAX contributed to two fatal crashes and a massive loss of public confidence.

- H&M: A misjudged advertisement considered racially insensitive led to immediate backlash, forcing the company to issue a public apology and reassess its marketing strategies.

These examples show that even the biggest global brands are vulnerable when they neglect ethical foresight.

Who’s Getting It Right

While some companies stumble, others are setting new standards by embedding ethics into their brand purpose.

Companies leading with ethics include:

- Patagonia: Known for its environmental activism, the company donates a portion of its profits and consistently aligns its messaging with its sustainability mission.

- Ben & Jerry’s: Actively takes public stances on social justice issues, keeping its values aligned with consumer expectations.

- Salesforce: Emphasizes equality and ethical data practices, positioning itself as a trusted tech brand in a skeptical industry.

These brands demonstrate how ethics can be a competitive advantage.

Key Takeaways

Ethical decisions are no longer private boardroom matters. They shape brand identity and public perception. The link between company values and customer loyalty is clear.

Lessons to remember:

- Ethical blind spots carry high reputational costs

- Transparency and accountability earn long-term trust

- Proactive ethical leadership is no longer optional

In 2024 and beyond, brands that succeed will be those that anticipate ethical pressure, respond with integrity, and embed values into every level of decision-making.

AI isn’t just a shiny toolset for vloggers; it brings real ethical questions that are only getting louder. First, bias in training data is still a massive blind spot. If a model learns patterns from a skewed dataset, the content or decisions it helps create can reproduce those same inequalities. This matters because AI already shapes what gets recommended, highlighted, and even monetized. Creators who don’t match those patterns might get sidelined before they get seen.

Then there’s the issue of transparency. Most creators have no idea how or why a platform’s algorithm pushes one video and buries another. That mystery can kill momentum, especially when creators rely on these systems to earn a living. Explainability, meaning actually knowing how AI makes its calls, could level the playing field, but most platforms aren’t rushing to open the black box.

Privacy and surveillance are also creeping in. AI tools often track behavior in fine-grained detail to personalize content and ads. For some, that’s helpful. For others, it’s invasive. Drawing the line is tricky, but 2024 is pushing those conversations to the forefront.

Finally, when AI screws up—when it spreads misinformation, mislabels a video, or suppresses voices—who takes the fall? The platform? The developer? The creator? Right now, answers are vague. But as automation expands across the creator economy, accountability shouldn’t be optional.

The Role of Policy, Regulation, and Public Pressure

AI tools are everywhere in the vlogging ecosystem now. Editing, scripting, thumbnails—automation is the quiet engine running behind the scenes. But ethical use of AI doesn’t happen on its own. Creators, platforms, and audiences all have a role to play, and 2024 is making that clearer than ever.

Governments are starting to catch up. Rules on transparency, data use, and misinformation are taking shape in several regions; the EU’s AI Act, for example, includes disclosure requirements for certain AI-generated content such as deepfakes. It’s not just tech companies being held accountable anymore. If a vlogger uses AI to generate content that misleads or misrepresents, that could draw scrutiny from regulators and audiences, not just from platform algorithms.

Ethical AI isn’t just a backend issue owned by developers. Creators making daily content choices are now frontline actors. From labeling AI-generated material to deciding when a real human voice matters, responsibilities are stacking up quickly. Keeping audience trust means staying sharp on the ethical front.

At the same time, cross-functional collaboration is becoming a built-in defense. Creators are leaning on editors, moderators, and even legal consultants to help vet practices. It’s not overkill—it’s survival. Audiences notice when ethics are baked in versus slapped on, and smart creators are treating AI not just as a tool, but as terrain that needs navigation.

Ethics aren’t killjoys. They’re the scaffolding that makes vlogging sustainable as AI becomes more embedded. The ones who adapt now will shape how the future of content looks—and how it’s trusted.

Responsible AI: Principles Over Hype

As artificial intelligence continues to power content creation, recommendation engines, editing tools, and moderation features, creators and platforms alike must face a growing truth: smart AI isn’t enough if it isn’t principled. In 2024, ethics in AI won’t just be a technical concern—it will become a true differentiator in the creator economy.

Why Principles Matter Now

While AI tools can supercharge efficiency and growth, they also carry risks when left unchecked. From bias in algorithms to misuse of synthetic media, the cost of ignoring ethical considerations is higher than ever.

- Smart AI without human values can damage trust

- Transparent use of AI builds credibility with audiences

- Ethical blind spots in AI could lead to long-term backlash

Ethics as Strategy, Not Just Compliance

Many creators and firms still treat ethics as an afterthought—or a box to check. But in a saturated digital space, transparency and fairness are becoming key brand pillars. Ethical practices aren’t just morally right; they’re strategically smart.

- Audience trust is now directly tied to ethical behavior

- Brands and sponsors are watching closely

- Clear ethical guidelines can future-proof your channel or company

Global Standards and Shared Responsibility

The AI ecosystem is evolving fast, and eyes are now on global organizations to step in. As platforms implement AI at scale, both creators and tech companies must share responsibility.

- Growing demand for international AI policies

- Calls for shared practices on transparency, data use, and bias mitigation

- Creators who engage with these conversations now will lead the space later

Takeaway: Building with AI is powerful—but aligning it with values is what makes it sustainable.

For a broader take on leadership strategy in tech, check out how innovation really happens when speed matters. The best tech leaders avoid chasing every new trend. Instead, they build systems that respond fast without losing focus. That means clear vision, flexible teams, and tools that adapt quickly—without breaking the mission. If you’re a creator thinking long-term, it’s worth seeing how these principles apply beyond just content. See the full breakdown here: How Tech Leaders Approach Innovation in Fast-Moving Markets

Isaac Lesureneric is a tech author at gfxrobotection focusing on digital security, automation, and emerging technologies. He shares clear, practical insights to help readers understand and adapt to the rapidly changing tech world.
