Why Responsible AI Regulation Is More Urgent Than Ever

Artificial intelligence is advancing rapidly, transforming industries from healthcare to entertainment. But alongside this innovation comes serious concern: how do we ensure AI systems are safe, ethical, and aligned with human values? The need for responsible regulation has moved past theory. It is now a practical necessity.

The Stakes Are Higher Than Ever

AI systems already influence decisions in:

- Hiring and recruitment processes

- Law enforcement and surveillance applications

- Healthcare diagnostics

- Financial lending models

Without proper oversight, these systems can reinforce bias, infringe on privacy, or make opaque decisions that humans can’t easily question.

Balancing Innovation and Public Safety

One of the greatest challenges in AI regulation is finding the right balance between safety and progress. Regulation should not stifle innovation, but the absence of standards can lead to public harm and eroded trust.

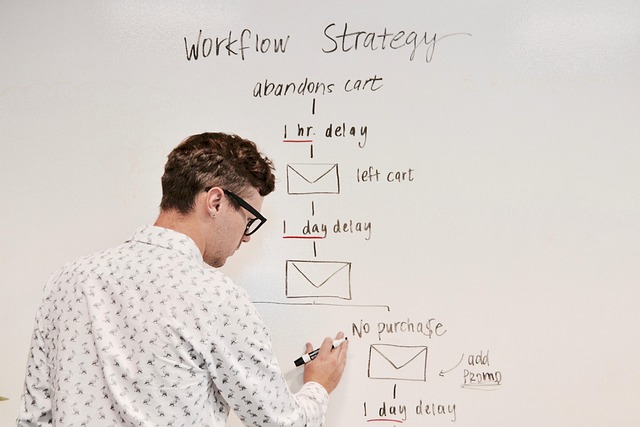

Key priorities for balanced regulation include:

- Encouraging transparent development practices

- Defining accountability for AI-based decisions

- Creating guidelines for bias testing and risk management

- Allowing space for startups and academic research to thrive

The Global Patchwork of AI Policy

Currently, AI regulation is fragmented across regions and industries. Some efforts exist, but no global standard has emerged. This leaves gaps that companies building and deploying sophisticated systems can exploit.

Who’s Doing What?

- European Union: Leading with the AI Act, which is built around a risk-based framework

- United States: A mix of executive guidance and state-level initiatives, but no comprehensive federal AI law yet

- China: Developing AI rules aligned with state interests, emphasizing content control

- Other nations: Exploring frameworks but often lacking enforcement procedures

Where the Gaps Exist

- Lack of cross-border enforcement mechanisms

- Undefined standards for AI transparency and explainability

- No globally accepted certification or testing procedures

To make regulation meaningful, coordination must grow among governments, tech providers, and civil society. The conversation is no longer about whether AI should be regulated, but about how quickly and effectively it can be.

We are at a crossroads where decisions made today will shape the digital foundations of the next century. Getting AI regulation right is not just a tech issue. It’s a human one.

Data, Bias, and Accountability: The Ethics of AI in Vlogging

As AI tools become embedded in how vloggers script, edit, and publish, ethical questions start cropping up fast. First, there's data privacy. AI runs on data: often yours, sometimes your audience's. Creators using voice generation or audience analytics need to be sure they're not unknowingly feeding personal info into black-box models. Right now, there's not much transparency about what's collected or how it's used. That's a risk.

Then there’s bias. AI isn’t neutral. If you’re using an AI tool to clean up video transcripts, suggest titles, or even draft outlines, it may carry baked-in assumptions. These can skew representation—or worse, exclude certain groups altogether. You don’t want to run a channel that works great for some but invisibly pushes others out.

Finally, accountability. If an AI tool falsely labels content, exaggerates a claim, or torpedoes your channel’s reputation, who takes the hit? Spoiler alert—it’s usually you. Creators need to vet what they use and stay hands-on. AI can streamline work, but hands-off use creates a liability trail.

Bottom line: AI helps. But don’t let the convenience become a blind spot.

Government & Policy Think Tanks

The regulatory landscape is finally catching up to AI's real-world impact. The European Union's AI Act is leading the charge, laying down rules on transparency, risk classification, and human oversight. In the United States, executive orders have started carving out guidelines aimed at responsible development and deployment. These moves aren't just symbolic. They aim to wrest real control over a fast-moving tech sector and signal that the hands-off days are numbered.

Policy analysts are cautiously optimistic. Some praise the EU's layered approach to risk as a solid blueprint, while others question how scalable and enforceable it really is beyond big tech hubs. Think tanks across the globe keep pushing for a coordinated effort. Without cooperation and shared standards, AI oversight risks becoming patchy at best and ineffective at worst. The conversation is shifting from whether regulation is needed to how fast we can make it work, globally.

For creators, it’s no longer enough to trust the algorithm and hope for the best. In 2024, the push is on for systems that aren’t black boxes. Platforms and toolmakers are being pressured to show how decisions get made—with plain language that normal people (not just engineers) can understand. When a video gets flagged or throttled, creators want to know why, not guess.

Then there’s the data. AI tools are getting better, but whose input are they learning from? More creators are asking that the datasets behind content tools be inclusive—reflecting different backgrounds, languages, regions, and styles. That doesn’t just make the tech fairer, it actually makes it better at helping vloggers reach their unique audiences.

And finally, there’s the human side. Automation is fast, but it can’t be the last word—especially when content is taken down or demonetized. Real oversight matters. But what creators don’t need is someone vaguely reviewing appeals and rubber-stamping whatever the algorithm decided. People want transparency, fairness, and a feedback loop they can actually engage with.

The vlogging world isn’t anti-tech—it’s anti-mystery. The clearer the rules and systems, the better creators can play the game and grow with it.

The Policy Gap Is Getting Wider

Tech isn’t waiting around for the rules to catch up. Vlogging tools, AI-powered features, and platform updates are evolving faster than regulators can blink. While creators explore new formats and monetization models, policymakers are still trying to define yesterday’s internet. That disconnect is growing, and for vloggers, the risks are real: sudden takedowns, unclear copyright enforcement, or new rules that seem out of touch.

Geopolitical friction doesn’t help. Countries are diverging on digital speech, data privacy, and algorithmic transparency. This affects global creators. A harmless upload in one country might violate laws in another. Creators working across borders need to stay sharp—because a misstep can mean demonetization or even bans.

And then there’s the human factor. Many lawmakers simply don’t understand the platforms they’re trying to regulate. That leads to vague rules and clumsy enforcement, leaving creators to navigate a system that feels reactive and inconsistent. Vloggers looking to stay in the clear will need to track policy updates as closely as they do trends.

Regulation is no longer something creators and platforms can ignore or sidestep. As digital content increasingly intersects with public policy, data privacy, and online safety, collaboration across sectors has become essential. Tech companies, creators, advertisers, and regulators need to work together to build standards that serve everyone.

Public input matters. Content ecosystems can’t just be shaped in boardrooms. Civic engagement—through open comment periods, creator advocacy, and digital literacy campaigns—helps ensure policies reflect real-world needs and experiences. The same viewers who boost engagement should also have a voice in shaping the environment where that content lives.

The bottom line: regulation doesn't have to kill creativity. It can provide a stable framework for creators to work within. When done right, it builds trust, protects communities, and strengthens the long-term sustainability of the platforms we all rely on.

Lorissa Ollvain is a tech author and co-founder of gfxrobotection with expertise in AI, digital protection, and smart technology solutions. She is dedicated to making advanced technology accessible through informative, user-focused content.